In the bustling city of Dar es Salaam, where time races alongside its vibrant energy, an incident recently unfolded that sent shockwaves through the digital landscape. As I made my way to the office on another eventful morning, a colleague shared a tweet with a link to The Citizen, igniting a wave of curiosity and concern. The article chronicled the extraordinary tale of Sayida Masanja, a businessman from Shinyanga, Tanzania, who found himself entangled in a legal battle with telecommunications giant Vodacom Tanzania Plc. His claim? A staggering 10 billion Tanzanian shillings, equivalent to approximately $4.3 million, in damages for the allegedly unauthorised sharing of his personal data with an artificial intelligence (AI) chatbot.

Vodacom Sued for Data Sharing with ChatGPT

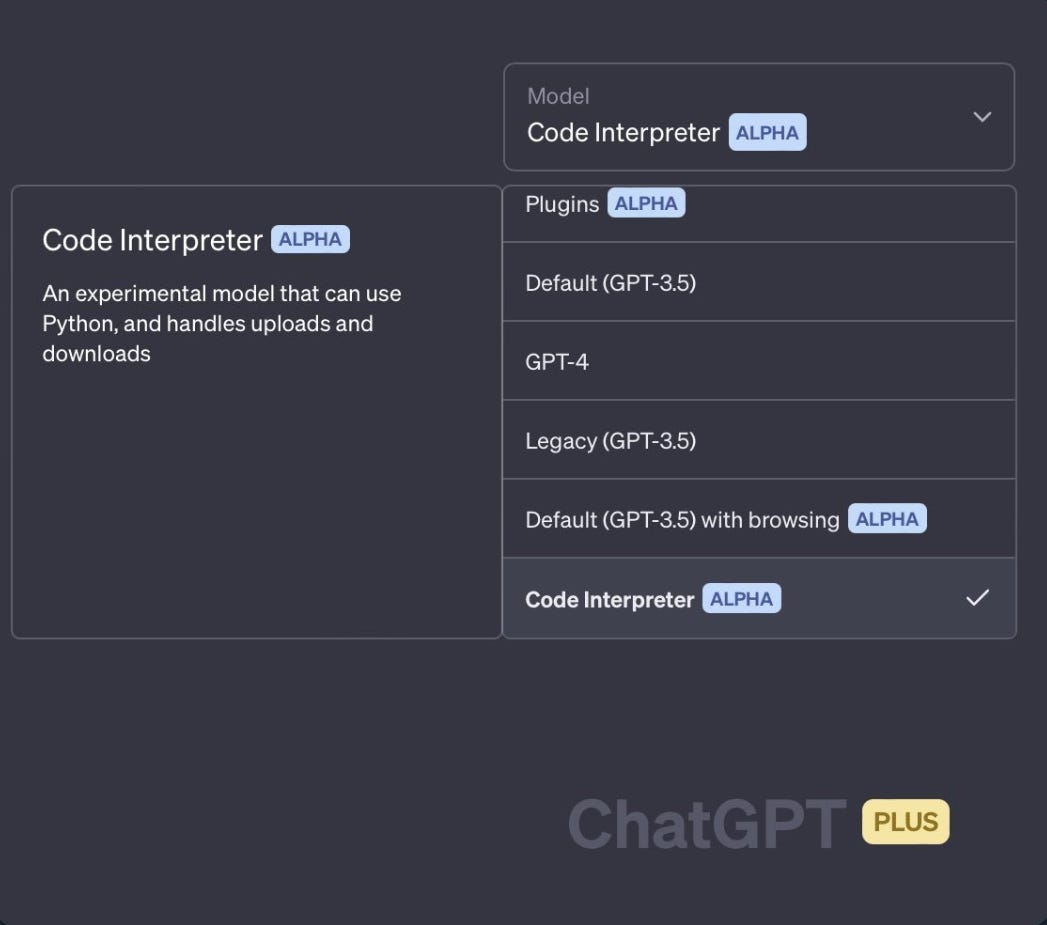

Masanja’s case asserts a profound loss of privacy stemming from Vodacom’s purported failure to safeguard his valuable data. According to his claims, the company allowed a third party to gain unauthorised access to his proprietary network information, an egregious breach that struck at the heart of his personal security. What made this allegation all the more disconcerting was that the AI chatbot implicated in it was none other than ChatGPT, a remarkable creation known for its human-like responses and capacity to engage in seemingly genuine conversations.

According to Masanja, discovering his own personal data nestled within the vast reaches of ChatGPT was nothing short of a chilling revelation. Calls, SIM identity, personally identifiable information (PII), and even various locations had, he claims, been laid bare within the digital realm. Vodacom, however, firmly denied these grave accusations, rejecting any connection to ChatGPT or the facilitation of unauthorised data access. Its defence rested on an argument of jurisdiction, asserting that the case had been filed in the wrong court. With the legal proceedings scheduled for September, the fate of this intriguing battle hung in the balance.

As news of this unsettling incident spread, it cast a stark spotlight on the shadows lurking beneath the surface of technological advancements. The fundamental question echoed through the minds of many: Are we truly prepared for the revolutionary power of AI? This case, irrespective of its outcome, served as a piercing alarm, jolting us into contemplating the inherent risks and profound implications that accompany the dazzling rise of AI technology. The very tools designed to empower and assist us now reveal a darker side, reminding us of the delicate balance that must be struck between progress and safeguarding our most cherished possessions – our privacy and personal information.

Navigating the AI Abyss: Unveiling the Challenges and Data Privacy Quandaries

In the realm of rapid technological advancement, the rise of AI has ignited both awe and apprehension. As AI systems like ChatGPT demonstrate astonishing capabilities, they bring to the forefront a myriad of challenges that demand our attention and thoughtful consideration. From the ethical implications that arise from AI’s potential to amplify biases and propagate misinformation to the critical issue of data privacy in an era of extensive data collection, the path forward is paved with complex obstacles. Here are some major concerns around the explosion of AI systems.

- Ethical implications: One of the major concerns surrounding the AI revolution, as exemplified by ChatGPT, is the ethics of its use. AI systems can amplify biases, propagate misinformation, or be used for malicious purposes. The responsibility to ensure ethical AI falls on developers and policymakers.

- Data privacy: AI models like ChatGPT require extensive data for training, which raises concerns about data privacy. Users interacting with AI systems may unknowingly disclose sensitive information, and there is a need for robust data protection measures to prevent misuse or unauthorised access to personal data.

- Lack of transparency: AI models are often considered black boxes, meaning they produce results without clear explanations. This lack of transparency can be problematic when it comes to decision-making systems or critical applications where accountability and understanding the decision-making process are essential.

- Job displacement and economic impact: The rise of AI technologies, including advanced language models like ChatGPT, raises concerns about job displacement and its impact on the workforce. There is a fear that AI automation could replace certain job roles, leading to unemployment and potential economic inequality.

- Bias and fairness: AI systems can inherit biases present in the data used for training, resulting in biased outputs. This bias can have negative consequences, such as discriminatory decision-making or reinforcing existing societal prejudices. Ensuring fairness and addressing bias in AI models like ChatGPT is a critical concern.

- Security risks: AI systems can be vulnerable to security threats and malicious attacks. Adversarial attacks, where AI models are manipulated to produce incorrect or harmful outputs, highlight the need for robust security measures to protect AI systems from exploitation.

- Dependence and overreliance: As AI becomes more advanced, there is a concern about excessive dependence and overreliance on AI systems like ChatGPT. Relying heavily on AI for critical decision-making or complex tasks without appropriate human oversight can lead to errors or loss of control.

- Regulation and governance: The rapid development of AI technology has outpaced regulatory frameworks and governance mechanisms. Establishing regulations, standards, and guidelines for the responsible development and deployment of AI, including language models like ChatGPT, is crucial to mitigate risks and ensure public trust.

- Long-term societal impact: The revolution of AI, including the development of powerful language models like ChatGPT, has the potential for profound long-term societal impacts. It is important to carefully consider the social, economic, and cultural consequences of widespread AI adoption and ensure that benefits are distributed equitably.

- Human-AI interaction: The increasing integration of AI systems like ChatGPT in daily life raises concerns about the quality of human-AI interaction. Striking the right balance between AI and human involvement, maintaining user agency, and avoiding the erosion of human skills and empathy are important considerations.

Privacy Considerations When Dealing with AI: Safeguarding Individuals, Corporations, and Governments

Today, we will plunge into just one part of the vast ocean that is AI, homing in on the intricate world of data privacy and its intersection with AI. While we delve into this vital topic, it is important to note that the journey doesn’t end here. In forthcoming articles, we will continue our expedition, immersing ourselves in each of the challenges that permeate the AI landscape. From the ethical implications to the lack of transparency, job displacement to bias and fairness, security risks to the quest for regulation and governance, the long-term societal impact, and the delicate dynamics of human-AI interaction, each facet merits its own dedicated exploration.

On the privacy front, individuals, corporations, and governments find themselves at the crossroads of a transformative era as AI technologies reshape our lives and institutions. Amid this technological revolution, privacy considerations emerge as a paramount concern, necessitating careful navigation and robust safeguards.

Drawing inspiration from the recent Vodacom case, we explore the challenges and offer potential solutions for each stakeholder in the realm of AI and privacy.

For individuals, the growing reliance on AI systems like ChatGPT raises concerns about unwittingly disclosing sensitive information. The Vodacom case (whatever the outcome) serves as a stark reminder of the risks posed by potential unauthorised data sharing. To address this, individuals must be empowered with greater awareness of, and control over, their data. Enhanced transparency, clear consent mechanisms, and accessible privacy settings can give individuals the agency to make informed choices about how their personal information is used.

Corporations, as custodians of vast amounts of personal data, bear a profound responsibility to protect user privacy. The Vodacom case highlights the potential consequences of data mishandling. To build trust, corporations must prioritise robust data protection measures. Implementing comprehensive privacy policies, employing encryption technologies, conducting regular audits, and fostering a culture of privacy awareness can safeguard user data while demonstrating a commitment to privacy best practices.
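To make one of these measures a little more concrete, here is a minimal sketch of pseudonymisation: replacing direct identifiers with keyed hashes before a record leaves the organisation. It is an illustration only, not a description of how Vodacom or any other company handles data. The field names (`msisdn`, `sim_id`, `name`), the sample record, and the key are all made up for the example, and a real deployment would also need proper key management and legal review.

```python
import hashlib
import hmac

# Hypothetical field names; a real subscriber record would look different.
SENSITIVE_FIELDS = {"msisdn", "sim_id", "name"}


def pseudonymise(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record with sensitive fields replaced by keyed hashes."""
    safe = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS:
            # HMAC-SHA256 gives a stable token that cannot be recomputed without the key.
            digest = hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256)
            safe[field] = digest.hexdigest()
        else:
            safe[field] = value
    return safe


# Made-up sample data and key, for illustration only.
record = {
    "msisdn": "255700000000",
    "sim_id": "SIM-001",
    "name": "Example User",
    "region": "Shinyanga",
}
key = b"demo-key-not-for-production"
shared = pseudonymise(record, key)
```

The same input and key always yield the same token, so records can still be linked for analysis, while anyone without the key cannot recompute a token from a known phone number or name. Pseudonymised data can still be personal data, so this complements encryption, access controls, and audits rather than replacing them.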

Governments play a pivotal role in establishing the legal and regulatory frameworks necessary to protect privacy in the AI era. The Vodacom case, with its legal ramifications, underscores the need for comprehensive data protection laws and effective enforcement mechanisms. Governments must enact privacy legislation that strikes a delicate balance, safeguarding individual privacy rights while enabling responsible AI innovation. Additionally, fostering collaboration between public and private sectors can promote the development of privacy-preserving technologies and encourage transparency and accountability. This raises another concern: do the judiciary and other relevant government bodies even understand the AI world? But that is a story for another day.

Ultimately, a collective effort is required to address privacy concerns in the realm of AI. Individuals must stay informed and exercise their privacy rights, corporations must prioritise data protection, and governments must enact robust privacy laws. By fostering a privacy-first mindset, we can navigate the AI landscape while preserving individual liberties and societal trust. Through continuous dialogue and collaboration, we can build a future where the benefits of AI coexist harmoniously with the protection of personal privacy.